Introduction: Powering Low-Latency Computing with In-Memory Grids

As enterprises face increasing demands for real-time data processing and ultra-low latency performance, in-memory grid (IMG) technology has emerged as a key enabler of high-speed, distributed computing. By storing data in RAM across multiple servers instead of traditional disk storage, in-memory grids dramatically reduce access times and support high-throughput workloads.

This architecture is revolutionizing industries such as financial services, telecommunications, retail, and logistics, where immediate data insights and fast transaction processing are critical. The in-memory grid market is projected to reach USD 15.24 billion by 2032, growing at a compound annual growth rate (CAGR) of 12.89% from 2024 to 2032.

How In-Memory Grid Works in Distributed Architectures

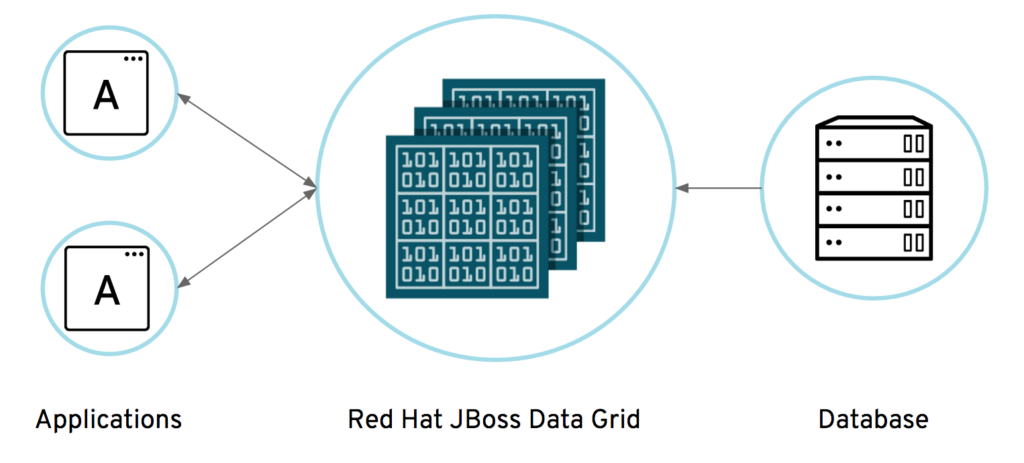

In-memory grids pool the memory of a cluster of servers into a single logical data store, so that data can be processed close to where it resides. The grid partitions data across nodes for parallel access and keeps backup copies of each partition on other nodes, which provides high availability and lets capacity grow with the cluster.
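
As a rough illustration of how partitioning and backup placement fit together, the Python sketch below hashes each key to one of a fixed number of partitions and gives every partition a primary owner plus a backup on a different node. The partition count of 271 mirrors Hazelcast's documented default but is used here only as an illustrative constant; the SHA-256 key hash, the round-robin placement, and all names (`partition_for`, `assign_partitions`, `node-a`, and so on) are assumptions for this sketch, not any product's actual algorithm.

```python
import hashlib

# Illustrative constant (Hazelcast's documented default is 271 partitions).
PARTITION_COUNT = 271


def partition_for(key: str, partition_count: int = PARTITION_COUNT) -> int:
    """Map a key to a partition with a stable hash, so every node computes the same answer."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def assign_partitions(nodes: list[str], partition_count: int = PARTITION_COUNT,
                      backup_count: int = 1) -> dict[int, list[str]]:
    """Spread partitions round-robin; owners[0] is the primary, the rest are backups."""
    if backup_count >= len(nodes):
        raise ValueError("need more nodes than backups so replicas land on distinct nodes")
    return {
        p: [nodes[(p + i) % len(nodes)] for i in range(backup_count + 1)]
        for p in range(partition_count)
    }


nodes = ["node-a", "node-b", "node-c"]
table = assign_partitions(nodes)
p = partition_for("account:42")
primary, backup = table[p]
print(f"account:42 -> partition {p}, primary={primary}, backup={backup}")
```

Because any node can recompute `partition_for` locally, a client can route a request straight to the primary owner without a central lookup.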

With built-in load balancing, failover, and distributed computing capabilities, IMG platforms such as Hazelcast, Apache Ignite, and Oracle Coherence can scale to millions of operations per second across a cluster while keeping latency low. This makes them well suited to mission-critical and time-sensitive applications.
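
Failover can be pictured as rewriting a partition table when a node drops out. The hypothetical `failover` function below assumes each partition has one primary and one backup on distinct nodes: partitions whose primary failed promote their backup, and any empty replica slot is refilled on the surviving node that holds the fewest replicas, a simple stand-in for load balancing. It is a single-process sketch; real platforms also migrate the data itself and coordinate the change across the cluster.

```python
from collections import Counter


def failover(table: dict[int, list[str]], failed: str,
             live_nodes: list[str], replicas: int = 2) -> dict[int, list[str]]:
    """Return a new table without `failed`; owners[0] is the primary, owners[1:] are backups.

    Dropping the failed node from the front of a list promotes its backup to primary.
    Empty replica slots are refilled on the live node holding the fewest replicas.
    """
    if len(live_nodes) < replicas:
        raise ValueError("not enough live nodes to restore the replica count")
    new_table = {p: [n for n in owners if n != failed] for p, owners in table.items()}
    load = Counter(n for owners in new_table.values() for n in owners)
    for owners in new_table.values():
        while len(owners) < replicas:
            candidates = [n for n in live_nodes if n not in owners]
            target = min(candidates, key=lambda n: (load[n], n))
            owners.append(target)
            load[target] += 1
    return new_table


nodes = ["node-a", "node-b", "node-c"]
table = {p: [nodes[p % 3], nodes[(p + 1) % 3]] for p in range(6)}
after = failover(table, "node-c", ["node-a", "node-b"])
print(table[2], "->", after[2])  # the backup node-a is promoted to primary
```

Because the promoted backup already holds a copy of the partition in memory, reads can continue without reloading from disk; only the new backup copies need to be rebuilt.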

Use Cases Across Industry Verticals

In the financial sector, in-memory grids are used extensively for real-time fraud detection, risk analytics, and high-frequency trading, where milliseconds matter. Telecom companies deploy IMGs to manage user data in real time for billing and personalized services.

Retailers benefit from instant recommendations, dynamic pricing, and inventory management powered by real-time analytics. Logistics firms use in-memory grids for route optimization, shipment tracking, and predictive maintenance—all requiring high-speed data flow and decision-making.

Key Advantages Driving Adoption

The primary advantage of in-memory grids is speed. By keeping data in RAM and avoiding disk reads on the request path, IMG systems can deliver sub-millisecond response times. They also support massive concurrency, allowing thousands of users or systems to access and process data simultaneously.

In-memory grids also scale horizontally: businesses can add capacity by adding nodes, and the grid redistributes data across them. Caching, data replication, and automatic failover keep the system available and resilient under heavy load.
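
Adding nodes is only seamless if the grid avoids reshuffling most of its data. One technique with that property is rendezvous (highest-random-weight) hashing, which Apache Ignite's default affinity function is based on. The sketch below is a generic version with a SHA-256 score and hypothetical node names, not Ignite's implementation; it measures how many keys change owner when a fourth node joins a three-node cluster.

```python
import hashlib


def score(key: str, node: str) -> int:
    """Pseudo-random weight for a (key, node) pair; every client computes the same value."""
    digest = hashlib.sha256(f"{node}|{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def owner(key: str, nodes: list[str]) -> str:
    """Rendezvous hashing: the node with the highest score for this key owns it."""
    return max(nodes, key=lambda n: score(key, n))


keys = [f"order:{i}" for i in range(10_000)]
before = ["node-a", "node-b", "node-c"]
after = before + ["node-d"]

moved = [k for k in keys if owner(k, before) != owner(k, after)]
print(f"{len(moved) / len(keys):.1%} of keys moved")  # expected to be close to 25%
```

Only keys that the new node wins move, so roughly a quarter of them relocate. A naive `hash(key) % node_count` scheme would instead move about three quarters of the keys when going from three to four nodes, because a key keeps its owner only when its hash leaves the same remainder modulo 3 and modulo 4.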

Integration with Real-Time Analytics and AI

In-memory grids are increasingly being paired with real-time analytics and machine learning systems. They serve as both the data layer and the execution engine for in-memory analytics, allowing businesses to make decisions on streaming data without persisting it first.
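
Acting as an execution engine usually means sending the computation to the nodes that hold the data instead of pulling every entry to the client. The sketch below simulates that pattern in one process: each hypothetical `Node` runs a task against its own in-memory entries and returns only a small partial result (a count and a sum), and the caller merges the partials into a cluster-wide average. The thread pool stands in for remote calls; the class and function names are illustrative assumptions, not a specific product API.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Node:
    """Hypothetical grid member holding its share of the entries in memory."""
    name: str
    data: dict[str, float] = field(default_factory=dict)

    def run_local(self, task: Callable[[dict[str, float]], tuple[int, float]]) -> tuple[int, float]:
        # The task only touches this node's entries; just its small result is returned.
        return task(self.data)


def partial_stats(entries: dict[str, float]) -> tuple[int, float]:
    """Per-node partial aggregate: (number of entries, sum of values)."""
    return len(entries), sum(entries.values())


def cluster_average(nodes: list[Node]) -> float:
    # Fan the task out to all nodes in parallel (threads stand in for remote calls).
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        partials = list(pool.map(lambda node: node.run_local(partial_stats), nodes))
    count = sum(c for c, _ in partials)
    total = sum(t for _, t in partials)
    return total / count if count else 0.0


nodes = [
    Node("node-a", {"txn:1": 10.0, "txn:2": 20.0}),
    Node("node-b", {"txn:3": 30.0}),
    Node("node-c", {"txn:4": 40.0}),
]
print(cluster_average(nodes))  # 25.0
```

Shipping a `(count, sum)` pair instead of raw entries keeps network traffic proportional to the number of nodes rather than the size of the data.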

AI applications benefit from fast access to training data and real-time inference capabilities, especially in edge computing environments. IMG’s compatibility with Apache Spark and Kubernetes enhances its use in modern cloud-native architectures.

Challenges and Considerations

Despite their advantages, in-memory grids come with challenges. RAM is more expensive than disk storage, making memory-intensive solutions potentially costly at scale. Ensuring consistency across distributed memory can be complex, especially in multi-node or hybrid cloud environments.
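
One common way to keep concurrent updates consistent without holding distributed locks is optimistic concurrency: read a value along with its version, then write only if the version is unchanged, retrying otherwise. The toy `VersionedStore` below plays the role of a single partition owner, with a local lock making each compare-and-set atomic. It is an assumption-laden sketch of the idea, comparable in spirit to the atomic `replace(key, oldValue, newValue)` operation that Java's `ConcurrentMap` interface defines.

```python
import threading


class VersionedStore:
    """Toy stand-in for a partition owner: each key maps to (version, value)."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[int, int]] = {}
        self._lock = threading.Lock()

    def get(self, key: str) -> tuple[int, int]:
        with self._lock:
            return self._data.get(key, (0, 0))

    def compare_and_set(self, key: str, expected_version: int, new_value: int) -> bool:
        """Write only if no one has written since `expected_version` was read."""
        with self._lock:
            current_version, _ = self._data.get(key, (0, 0))
            if current_version != expected_version:
                return False
            self._data[key] = (current_version + 1, new_value)
            return True


def add_to_balance(store: VersionedStore, key: str, amount: int) -> None:
    # Optimistic read-modify-write: retry on conflict instead of locking the key.
    while True:
        version, value = store.get(key)
        if store.compare_and_set(key, version, value + amount):
            return


store = VersionedStore()
workers = [threading.Thread(target=add_to_balance, args=(store, "acct:1", 1)) for _ in range(50)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(store.get("acct:1"))  # (50, 50)
```

All 50 increments land despite running concurrently, because a writer that loses a race sees its compare-and-set fail and retries against the fresh value.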

Developers must also adapt traditional application logic to distributed computing principles, which can increase system complexity. Careful design, testing, and capacity planning are essential for successful deployment.
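
Capacity planning for a memory-bound system is mostly arithmetic. The sketch below estimates the node count from the raw data size, the number of backup copies, an overhead factor for indexes and metadata, and a maximum memory utilization that leaves headroom, then adds a spare node so the cluster can absorb one failure. Every input value here (200 GiB of data, one backup, 30% overhead, 70% utilization, 64 GiB per node) is a hypothetical placeholder, not a vendor recommendation.

```python
import math


def nodes_needed(data_gib: float, backup_count: int, overhead_ratio: float,
                 max_utilization: float, node_memory_gib: float,
                 spare_nodes: int = 1) -> tuple[float, int]:
    """Return (total memory required in GiB, node count including spares)."""
    # Every entry is stored once as a primary plus `backup_count` replicas.
    replicated = data_gib * (1 + backup_count)
    # Indexes, metadata, and serialization overhead on top of the raw data.
    with_overhead = replicated * (1 + overhead_ratio)
    # Keep headroom so no node runs its memory at 100%.
    required = with_overhead / max_utilization
    return required, math.ceil(required / node_memory_gib) + spare_nodes


# Hypothetical inputs: 200 GiB of data, 1 backup, 30% overhead, 70% max utilization, 64 GiB nodes.
required_gib, node_count = nodes_needed(200, 1, 0.30, 0.70, 64)
print(f"{required_gib:.0f} GiB across {node_count} nodes")  # 743 GiB across 13 nodes
```

With these inputs, 200 × 2 × 1.3 / 0.7 ≈ 743 GiB, which needs 12 nodes of 64 GiB, plus one spare. Note how a single backup copy alone doubles the memory bill, which is why RAM cost dominates at scale.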

Future Outlook and Market Trends

The future of in-memory grids lies in tighter integration with cloud services, containerization, and AI pipelines. With RAM prices falling and fast NVMe SSDs gaining traction as a lower-cost tier beneath RAM, cost barriers are shrinking.

Hybrid IMG systems that combine in-memory and persistent storage will enable tiered architectures that offer both speed and durability. As edge computing and IoT applications continue to grow, lightweight in-memory grid deployments will also gain popularity for real-time, decentralized processing.
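
A tiered setup can be sketched as a write-through cache: every write goes to both a bounded in-memory tier and a durable store, and reads are served from memory when possible, falling back to the durable tier on a miss. In the minimal sketch below, SQLite from Python's standard library stands in for the persistent tier and a small least-recently-used dictionary stands in for the in-memory grid; the class name and the two-entry capacity are illustrative assumptions.

```python
import sqlite3
from typing import Optional


class WriteThroughTier:
    """Hot tier: a bounded in-memory LRU dict. Durable tier: SQLite."""

    def __init__(self, db_path: str = ":memory:", max_hot_entries: int = 2) -> None:
        self.db = sqlite3.connect(db_path)
        self.db.execute("CREATE TABLE IF NOT EXISTS kv (k TEXT PRIMARY KEY, v TEXT)")
        self.hot: dict[str, str] = {}
        self.max_hot_entries = max_hot_entries

    def put(self, key: str, value: str) -> None:
        # Write-through: persist first, then update the in-memory copy.
        with self.db:  # commits the transaction if the block succeeds
            self.db.execute("INSERT OR REPLACE INTO kv (k, v) VALUES (?, ?)", (key, value))
        self._remember(key, value)

    def get(self, key: str) -> Optional[str]:
        if key in self.hot:
            value = self.hot[key]
        else:
            row = self.db.execute("SELECT v FROM kv WHERE k = ?", (key,)).fetchone()
            if row is None:
                return None
            value = row[0]  # miss: load from the durable tier
        self._remember(key, value)
        return value

    def _remember(self, key: str, value: str) -> None:
        # Move the key to the end (most recently used); evict from the front if over capacity.
        self.hot.pop(key, None)
        self.hot[key] = value
        while len(self.hot) > self.max_hot_entries:
            self.hot.pop(next(iter(self.hot)))


tier = WriteThroughTier(max_hot_entries=2)
for k, v in [("a", "1"), ("b", "2"), ("c", "3")]:
    tier.put(k, v)
print(list(tier.hot))  # ['b', 'c']: 'a' was evicted from memory
print(tier.get("a"))   # prints 1, reloaded from SQLite
```

The design trades write latency (every write waits for the durable store) for the guarantee that evicted or lost in-memory entries can always be reloaded.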